Progress Report December 2023

Happy New Year!

We hope everyone is having a great start to 2024 and that you’re all rejuvenated for another year of listening to these (mostly) regular rambles. We’ve got a fair bit to go through, including the usual sprinkling of graphical fixes, a nice meaty section that users of battery-powered devices will want to read and, of course, our usual yearly wrap up on a few different topics, including compatibility and performance across 2023.

We’ll start out with a certified retro title in Monster Hunter Rise (MHR). Released in the antiquated year of 2021, the game has received several updates and DLC throughout its life, the newest of which has been a pain to emulate since its release. From the ‘Sunbreak’ DLC and updates onwards, MHR would crash on boot before reaching the title screen with an error that was very complicated to solve within the buffer cache.

The old implementation made the assumption that all the memory regions where buffers could be located are contiguous (they’re next to each other). In most cases this assumption is correct, but if you can guess a title where it isn’t, then you probably have average pattern recognition skills. To solve this, support was implemented for Vulkan’s sparse mapping feature, which allows multi-range buffers to be created from multiple physical buffers.
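To make the contiguity assumption a bit more concrete, here is a minimal Python sketch. It is not Ryujinx's buffer cache code; `Region`, `is_contiguous` and `split_into_runs` are hypothetical names invented for illustration. It checks whether a list of memory regions sit back to back and, if not, groups them into the separate runs that a multi-range buffer would have to stitch together.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    """A hypothetical memory region: start address and size in bytes."""
    address: int
    size: int

    @property
    def end(self) -> int:
        return self.address + self.size


def is_contiguous(regions: list[Region]) -> bool:
    """True if every region starts exactly where the previous one ends."""
    return all(a.end == b.address for a, b in zip(regions, regions[1:]))


def split_into_runs(regions: list[Region]) -> list[Region]:
    """Merge back-to-back regions; each result is one contiguous run."""
    runs: list[Region] = []
    for region in regions:
        if runs and runs[-1].end == region.address:
            last = runs.pop()
            runs.append(Region(last.address, last.size + region.size))
        else:
            runs.append(region)
    return runs


# Two adjacent regions followed by one that lives elsewhere in memory.
regions = [Region(0x1000, 0x1000), Region(0x2000, 0x800), Region(0x9000, 0x400)]
print(is_contiguous(regions))    # False
print(split_into_runs(regions))  # two runs: 0x1000..0x2800 and 0x9000..0x9400
```

With the old assumption, only the single-run case could be handled; anything that produces more than one run is where a sparse, multi-range buffer comes in.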

Perhaps the obvious downside here is that the feature is limited to Vulkan. OpenGL does technically support sparse mappings, but doesn’t allow you to choose where it will be mapped, making it effectively useless for our use case. Metal on macOS is in the same boat as OpenGL; while supported, it does not provide enough control of the buffer mapping to be viable, with the limitation thus extending to MoltenVK too.

For devices and drivers that fully support Vulkan (Nvidia, Intel and AMD) however, Sunbreak and onwards is finally playable!

Fashion Designer, a game which we’re sure will be topping Game of the Year charts across the globe, was exhibiting a particularly strange glitch.

While we’re all huugggge fans of socks (having received around 30 pairs at Christmas), this seems like a few too many. On closer inspection, there are a few too many of everything.

If you’re one of the few folks left to play Fashion Designer, then each one of these icons is meant to be a different item of clothing. Duplicates are bad.

Our culprit resides within the texture cache, where everything has a specific lifespan before the texture is flushed. Certain flags on each texture get set when the texture is first accessed, and when it is finally swapped out for a new texture. Fashion Designer appeared to be rendering different objects to the same texture, and as such only the first use of the texture was correctly setting the cache flags. When the game went on to request more draws of different objects, the same object texture was being copied multiple times. By resolving this edge case, our full arrangement of clothing items can be viewed.

Remaining on quirky uses of textures for the time being, when you catch a Cicada in Yo-Kai Watch 1, a nice 2D image of the bug is meant to take up a large part of the screen. Unfortunately, due to a questionable if statement silencing the one log warning that would have told us immediately where the issue was, it has taken a fair while to track down the… bug.

For whatever reason, the team developing Yo-Kai Watch 1 decided to perform an image store on a texture that is a quarter of the width of the base format, but stores four times the data per pixel as an RGBA32 texture. If this sounds pointless, it is, because what is done with that image straight away? It’s accessed as an RGBA8 image, which is an incompatible format conversion!
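If the size arithmetic is hard to picture, the following Python sketch may help. It assumes RGBA32 means four 32-bit channels (16 bytes per pixel) and RGBA8 means four 8-bit channels (4 bytes per pixel); the width of 256 and the channel values are made up for illustration.

```python
import struct

# 4 channels x 1 byte versus 4 channels x 4 bytes.
BYTES_PER_PIXEL = {"RGBA8": 4, "RGBA32": 16}


def row_bytes(fmt: str, width: int) -> int:
    """Size in bytes of one row of pixels in the given format."""
    return BYTES_PER_PIXEL[fmt] * width


width = 256  # hypothetical width of the RGBA8 view, in pixels
# A quarter of the width at four times the data per pixel is the same row size.
assert row_bytes("RGBA32", width // 4) == row_bytes("RGBA8", width)

# One RGBA32 texel written by the image store...
texel = struct.pack("<4I", 0x11223344, 0x55667788, 0x99AABBCC, 0xDDEEFF00)
# ...covers the same 16 bytes as four RGBA8 texels when read back.
rgba8_texels = [tuple(texel[i:i + 4]) for i in range(0, 16, 4)]
print(rgba8_texels[0])  # (68, 51, 34, 17): the first 32-bit channel, byte by byte
```

The bytes line up perfectly, which is presumably why the game gets away with it on hardware; the emulator still has to notice that the two views alias the same data.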

Adding a copy dependency to these formats resolves the bug and restores the bug.

This was also the cause of major graphical corruption in Wet Steps. The game still has a few other issues, but the difference is major.

Super Mario RPG had impressively few bugs when it released in late November, but our eagle-eyed users instantly noticed that Mario himself, and a few environmental objects, were slightly dull. While Mario is getting on in years and has probably lost the sparkle of his GameCube youth, it turned out that bindless elimination was not working correctly in a couple of cases. In the event that a shader handle is assigned twice via different paths, bindless elimination was unable to be extended, as it is unable to find the handle operation. However, even if different paths exist, the value is actually always the same, as any relevant data is unable to be modified once inside the shader pass. By fixing this to simply pick the first value if multiple routes exist, Super Mario RPG renders correctly.
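As a rough illustration of the "pick the first value" idea, here is a small Python sketch. The `Node` type, the `phi` and `load_handle` operation names and the `find_handle` function are all hypothetical and do not mirror Ryujinx's shader translator; the sketch only shows the shape of the lookup.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    """A hypothetical IR node: an operation name plus its source nodes."""
    op: str
    sources: List["Node"] = field(default_factory=list)
    value: Optional[int] = None


def find_handle(node: Node) -> Optional[Node]:
    """Walk back from a texture handle use to the operation that loads it."""
    seen = set()
    while node.op == "phi":
        if id(node) in seen or not node.sources:
            return None  # a cycle or an empty merge: give up
        seen.add(id(node))
        # Several paths feed this value. They are assumed to carry the same
        # handle, so following the first one is enough.
        node = node.sources[0]
    return node if node.op == "load_handle" else None


# The same handle assigned on two different paths, then merged.
merged = Node("phi", [Node("load_handle", value=0x28), Node("load_handle", value=0x28)])
print(find_handle(merged).value)  # 40
```

The shortcut is only safe because of the point made above: the data behind the handle cannot change once inside the shader pass, so every path has to agree.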

Another game that highlighted an unhandled edge case in bindless elimination was Detective Pikachu Returns. This one is more subtle, but extending through shuffle resolves cubemap reflections throughout the game.

Let’s take a short interlude to talk through some of the smaller, but perhaps interesting, changes that have taken place over the last couple of months.

- When amiibos are first used (via AmiiboAPI), the API JSON will be stored locally so that amiibos can be used offline.

- Our minimum macOS version has been increased to macOS 12 Monterey. We’re not sure why macOS 11 was chosen before, but it is wholly unsuitable for Switch emulation!

- A whole bunch of string, time and DateTime formatting changes. These fix sorting being backwards for play-time, file-size and last-played and, most importantly, remove a load of log spam if games have never been played.

- “Create Desktop Shortcut” actually works on macOS and also supports launching in fullscreen.

Alright strap in, it’s time for the “Ryujinx blog teaches you computer science” segment.

Sleeping

We’re all aware that sleep is important, right? Unfortunately, as is probably relatable to many, it’s actually more difficult than it may appear to sleep for the correct amount of time. Undersleeping and then feeling exhausted, or oversleeping and missing your train, are all too common experiences in the modern world. Computers are not so different.

When they’ve finished their tasks, they’d like nothing better than to pull up their duvet and tuck in for a solid 6ms nap before work the following nanosecond.

Ryujinx needs to operate on a very tight schedule and the best way to do this is actually not to sleep. No desktop kernel is truly “real time”, in the sense that it is impossible for us to sleep in one instant and wake whenever asked. There is always variability and delay to our requests (pretty graphs later).

Alternatives to sleeping

The alternative to sleeping is called “spin-waiting”, in which the program retains control of the CPU and continually asks it to “spin” until an event is triggered, which will release the CPU from this spin cycle. Spinning can be used to take very granular control over when and how the CPU stops and starts execution of a thread within a program, but comes with a major downside: it’s still active and using power the whole time. Consider sleeping vs spinwaits to be the difference between sleeping and sitting in a waiting room. You’re ready quicker if requested from a waiting room, but you’re less rested than if someone called you from sleep.
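Here is a minimal Python sketch of the two approaches side by side. The function names are made up for illustration, and Python's `time.sleep` stands in for whatever the emulator actually calls, so treat the numbers it prints as a rough feel rather than a measurement of Ryujinx.

```python
import time


def spin_wait(duration_ns: int) -> int:
    """Busy-loop until duration_ns has elapsed; returns the actual time waited."""
    start = time.perf_counter_ns()
    deadline = start + duration_ns
    while time.perf_counter_ns() < deadline:
        pass  # the thread never asks the OS to sleep, so it keeps burning CPU
    return time.perf_counter_ns() - start


def sleep_wait(duration_ns: int) -> int:
    """Hand the CPU back to the OS; returns the actual time waited."""
    start = time.perf_counter_ns()
    time.sleep(duration_ns / 1_000_000_000)
    return time.perf_counter_ns() - start


requested = 200_000  # 0.2ms
print("spin overshoot (ns): ", spin_wait(requested) - requested)
print("sleep overshoot (ns):", sleep_wait(requested) - requested)
```

On most machines the spin version should wake much closer to the requested time, at the cost of keeping a core busy for the entire wait.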

The problem

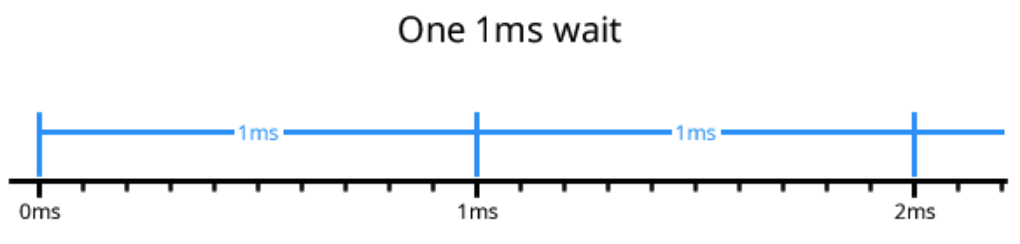

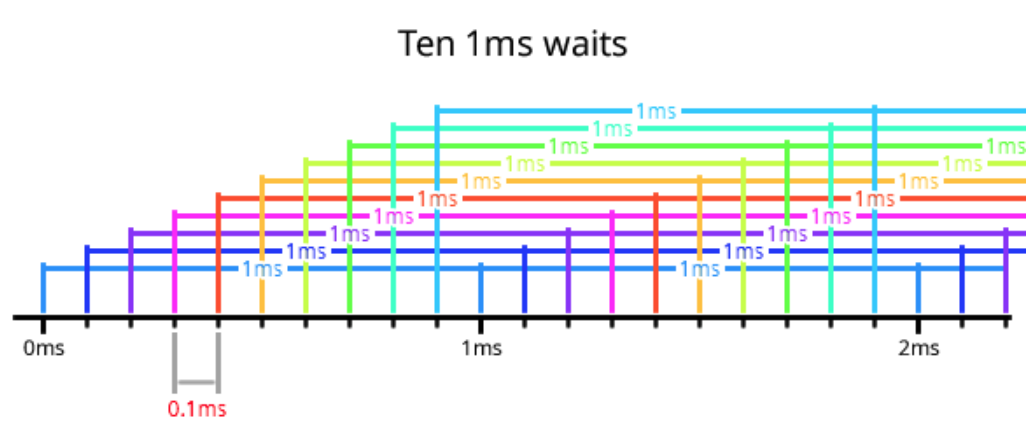

Now that we have the terminology out of the way, let’s look at the problem. If we have a single thread, monitoring a single event that wants to wait 1ms at a time, we have no problem at all.

Pretty much all three major operating systems will allow us to sleep with 1ms granularity, and be able to wake at the right time. However, consider this same scenario but with 10 different wait events on the same thread, all out of sync with each other by 0.1ms.

Now we start to run into issues. The solution we used up until the end of 2023 was to simply spin through these waits, but as you can see, this means spinning the entire time, as we need to be awake to handle the thread that is about to wake every 0.1ms.
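The arithmetic behind that is easy to check. This short Python sketch takes the scenario described above (10 events, each waking every 1ms, staggered by 0.1ms) and merges their deadlines into one timeline; the constant and function names are just for illustration.

```python
PERIOD_US = 1000   # each event wants to wake every 1ms
OFFSET_US = 100    # events are staggered by 0.1ms
EVENTS = 10


def wake_times(window_us: int) -> list[int]:
    """All wake-up deadlines (in microseconds) inside the window, sorted."""
    times = []
    for event in range(EVENTS):
        times.extend(range(event * OFFSET_US, window_us, PERIOD_US))
    return sorted(times)


deadlines = wake_times(3000)
gaps = {later - earlier for earlier, later in zip(deadlines, deadlines[1:])}
print(len(deadlines), gaps)  # 30 {100}
```

Every gap between consecutive deadlines is 0.1ms, which is far below the 1ms granularity that an ordinary sleep can deliver.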

We spoke in our last report about a change to `ServerBase` which stopped polling every 1ms. This reduced CPU utilization and power usage dramatically due to the problem above. ServerBase was a major contributor to the huge stack of concurrent waits we had to deal with. Unfortunately, it is only one of many thread types that request constant waits; game threads are just as significant a problem.

So how do we move forward? The CS nerds reading have been shouting “nanosleep!” at their screens for the last couple of minutes, and they’re half right.

Linux/macOS

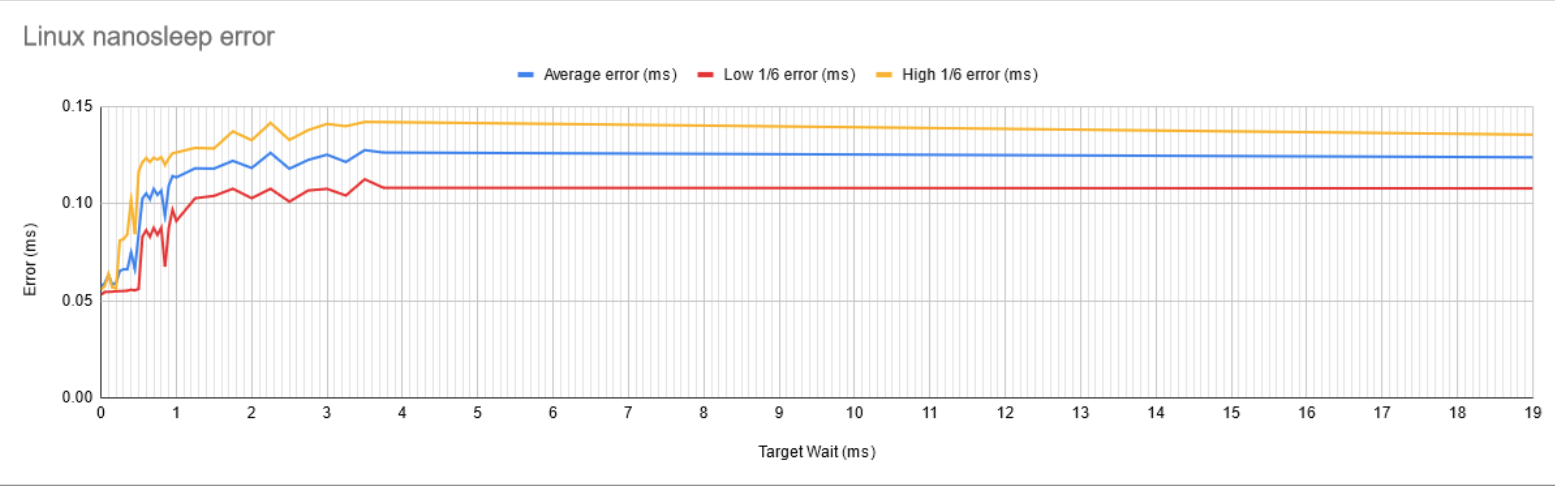

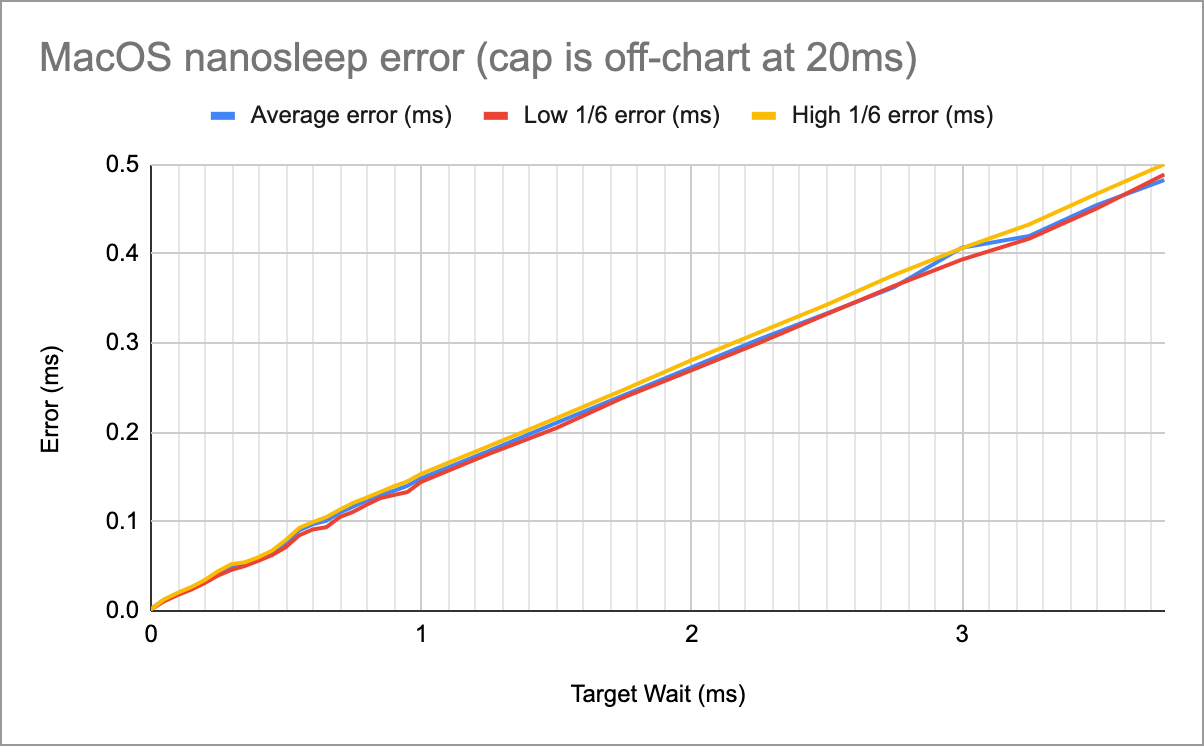

Linux and macOS both provide a `nanosleep` syscall to wait a precise number of nanoseconds. Nanosecond precision is more than capable of handling our above scenario, so let’s give it a whirl.

Upon testing on Linux, we see that nanosleep is not quite as precise as its name claims. At very low nanosecond values we see very consistent (and small) wake error values, but once we reach the threshold of 0.5ms, a huge spike in error occurs, which eventually levels off at around 1.5ms.

macOS curiously again has different behavior. At tiny wait requests, the syscall is remarkably accurate with minimal error on sleep requests down to the nanosecond. Unfortunately the error is directly proportional to the wait time requested, and keeps climbing until we see almost 0.5ms of delay when asking for a 3ms sleep, and over 3ms delay when asking for 20ms of sleep.

While these sound bad, the use case we wanted was already for those smaller sleep times, so both macOS and Linux have an efficient and easy way out here via nanosleep.
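If you would like to get a feel for this on your own machine, a rough Python sketch along these lines will do. Note the assumptions: `time.sleep` is used as a stand-in for calling `nanosleep` directly, the sample count and durations are arbitrary, and interpreter overhead is included in the error, so the absolute numbers will not match our graphs.

```python
import time


def measure_wake_error(requested_ns: int, samples: int = 20) -> float:
    """Average overshoot, in nanoseconds, when sleeping for requested_ns."""
    total_error = 0
    for _ in range(samples):
        start = time.perf_counter_ns()
        time.sleep(requested_ns / 1_000_000_000)
        total_error += (time.perf_counter_ns() - start) - requested_ns
    return total_error / samples


for requested in (10_000, 100_000, 500_000, 1_000_000, 3_000_000):
    error = measure_wake_error(requested)
    print(f"requested {requested / 1e6:.2f}ms -> average error {error / 1e6:.3f}ms")
```

What matters is how the error changes as the requested time grows, rather than any single value.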

Let’s talk about Windows…

Windows

The Windows NT kernel is, by far, the least “real time” of the three major options presented. It has no nanosleep equivalent, and as such is highly limited in how it can deal with our sleep problem. By default you can sleep to an accuracy of 1ms which, as we hope to have reiterated, is not good enough for as few as two concurrent waits (each 0.5ms apart), let alone 10. Is all hope lost then? Is 1ms really the best we can do? We’re pretty smart so the answer is: hell no.

On most x86_64 systems, you can query the clock resolution and discover that there is actually a 0.5ms resolution “base clock” and, perhaps more interestingly, that any waits you perform will by default align to the nearest “base clock tick” automatically. If you sleep with no thought, this means your thread may wake late due to alignment with the next base tick. However, if you have this information, and make a very smart guess about when the next tick will occur, you can time your sleep to 0.5ms precision and nearly always wake right before it.

None of this solves our issue of 10 concurrent 1ms waits, though. If we detect that a wait event is base-clock aligned (or very close to it), we can use the approach described above to align the wait and wake just before the next clock tick. If the wait is not base-clock aligned (or is extremely precise, like 0.01ms), then there is unfortunately nothing that the NT kernel provides us to solve this, beyond continuing to use spinwaits where needed.
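A toy model of that decision, in Python, might look like the following. Everything here is an assumption made for illustration: ticks are pretended to land on exact multiples of 0.5ms, the 20µs tolerance is invented, and `plan_wait` is a hypothetical name rather than anything from the Ryujinx codebase.

```python
TICK_US = 500  # the 0.5ms "base clock" period described above


def plan_wait(now_us: int, deadline_us: int, tolerance_us: int = 20) -> str:
    """Choose 'sleep' if the deadline sits on (or just after) a tick, else 'spin'."""
    tick = deadline_us // TICK_US * TICK_US  # last tick at or before the deadline
    lands_near_tick = deadline_us - tick <= tolerance_us
    tick_is_ahead = tick > now_us  # a tick that has already passed is no use
    return "sleep" if lands_near_tick and tick_is_ahead else "spin"


print(plan_wait(0, 1000))     # sleep: the deadline is exactly on a tick
print(plan_wait(0, 1010))     # sleep: close enough to the tick at 1000
print(plan_wait(0, 1250))     # spin: the deadline falls between two ticks
print(plan_wait(1005, 1015))  # spin: the nearest earlier tick is already gone
```

The real work is in predicting where the ticks actually fall, which this sketch skips entirely.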

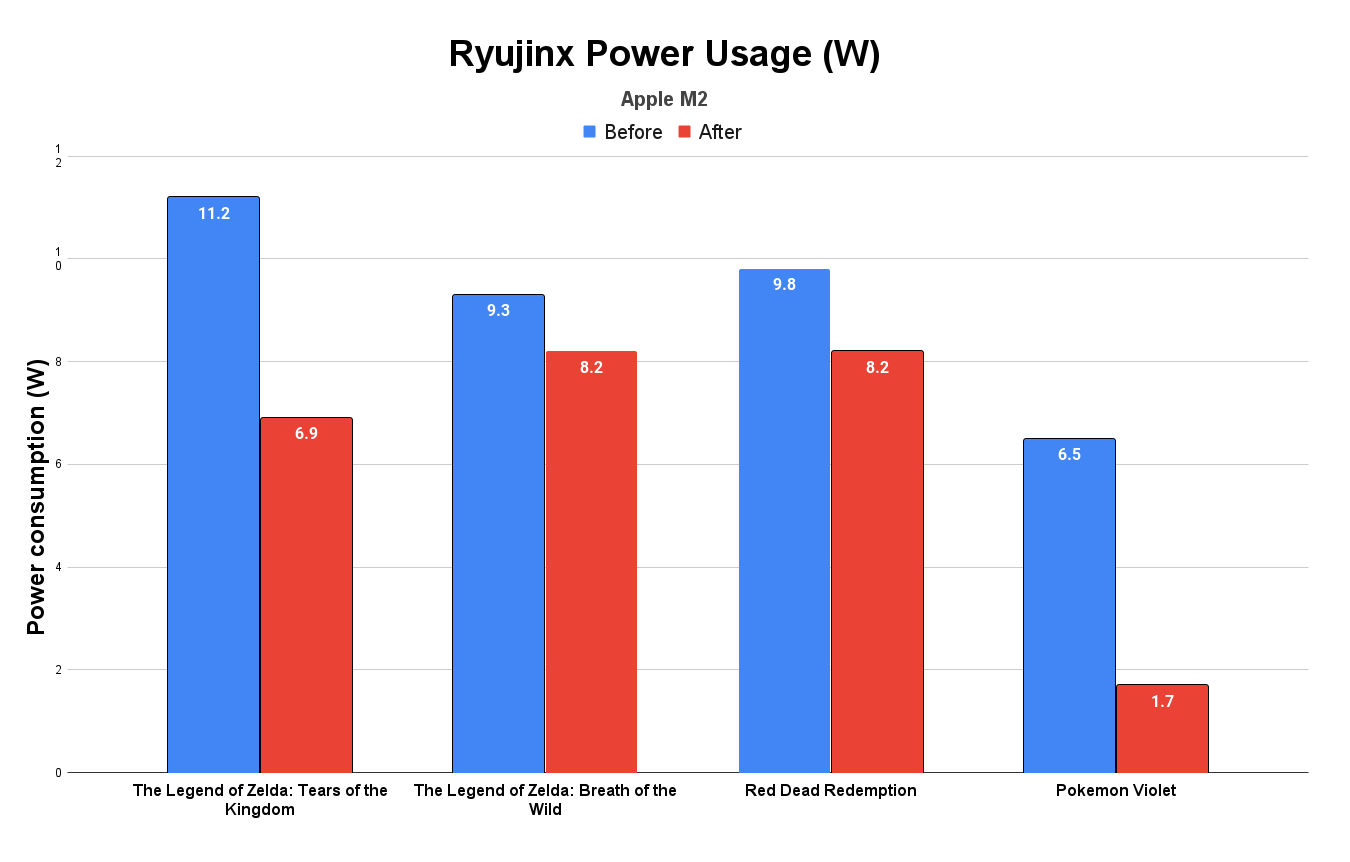

Class dismissed, let’s look at what all of this actually does. We said before this whole thing is about CPU usage and power draw, so some more graphs seem to be in order.

Apple and Linux devices will be seeing the largest benefit here, with some seriously impressive efficiency gains at equal frame rate and resolution. Tears of the Kingdom is being slashed by almost 40%, Red Dead Redemption and Breath of the Wild both see very healthy 15-20% shifts, and Pokémon Violet practically sheds everything with a 75% reduction. For perspective, that is an M2 MacBook Air emulating Pokémon Violet more efficiently than the Switch plays it natively.

Areas of games that don’t really do much like title screens, or when emulation is paused, see some wild reductions on devices like the Steam Deck.

These changes can result in additional hours of battery life while reducing the magnitude and frequency of thermal throttling, particularly in fanless devices such as the MacBook Air family. It also allows devices to reach and maintain their boost clocks during the times they’re actually needed, rather than being continually tricked into boosting on menus and when paused. If none of that sounds cool then we saved you some money on your next energy bill, take it or leave it!

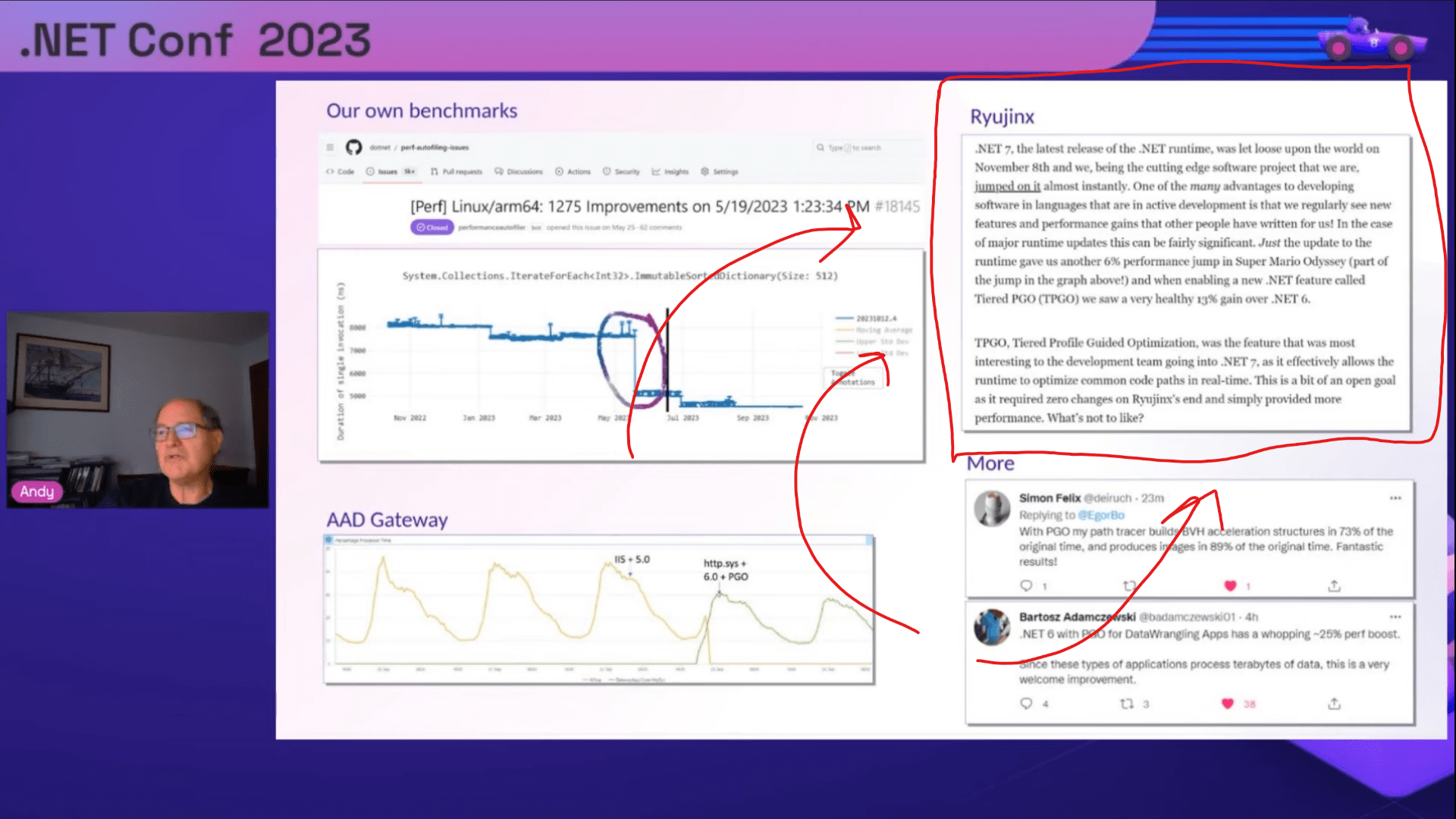

Lastly, for those of you who find it interesting that we decided to write this thing in C#/.NET, we had a new and shiny .NET version to update to in November: .NET 8 (they grow up so fast…). Microsoft always provides an absolutely huge document on all of the performance improvements they’ve brought to the table each year, but for us, they can usually be distilled into our patented Super Mario Odyssey pole benchmark.

This very blog also got a very cool mention during .NET Conf 2023 as part of their excellent talk on Dynamic PGO. Check that out here.

2023 roundup!

So how did 2023 go for us overall? Well let’s take a look, shall we?

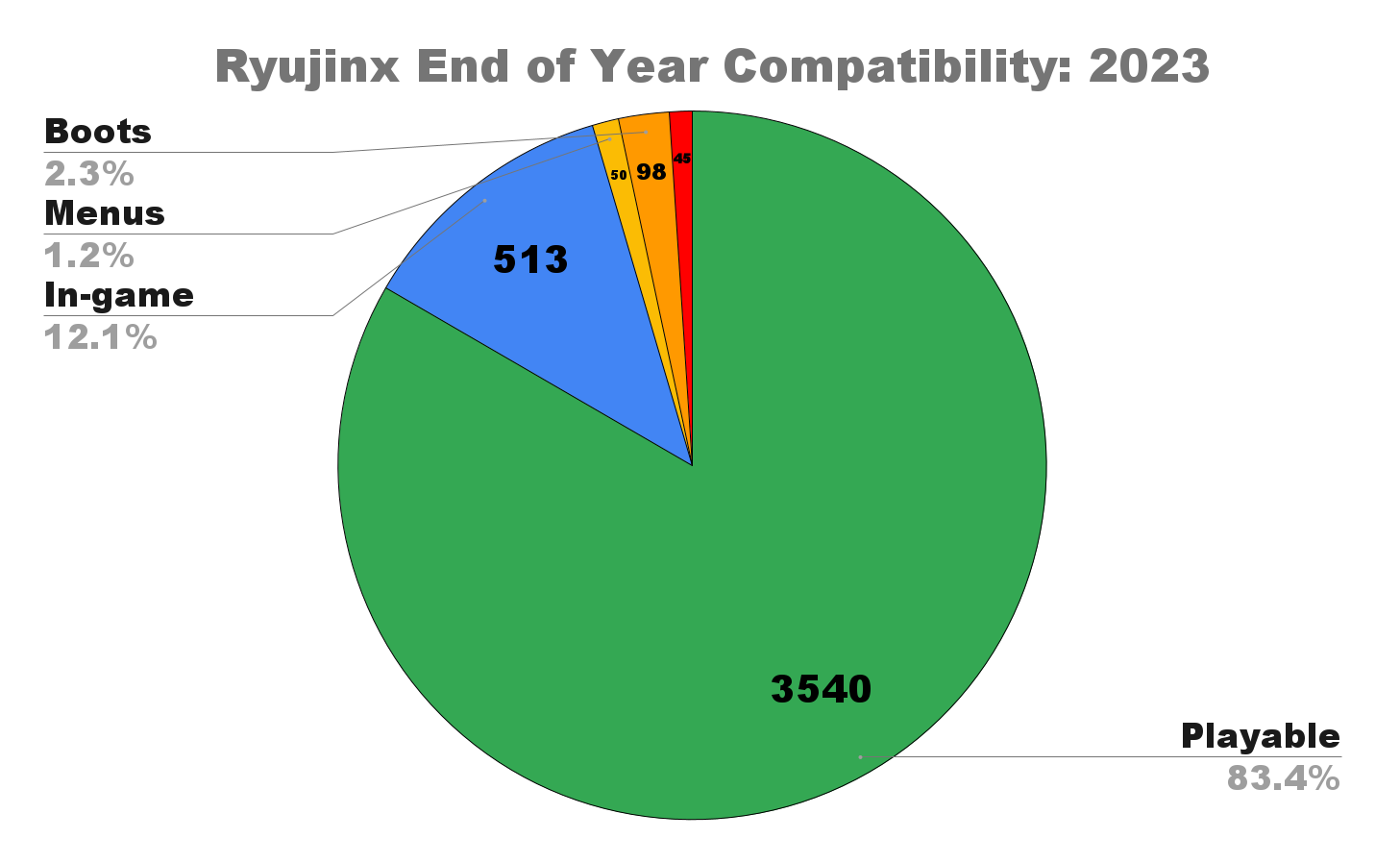

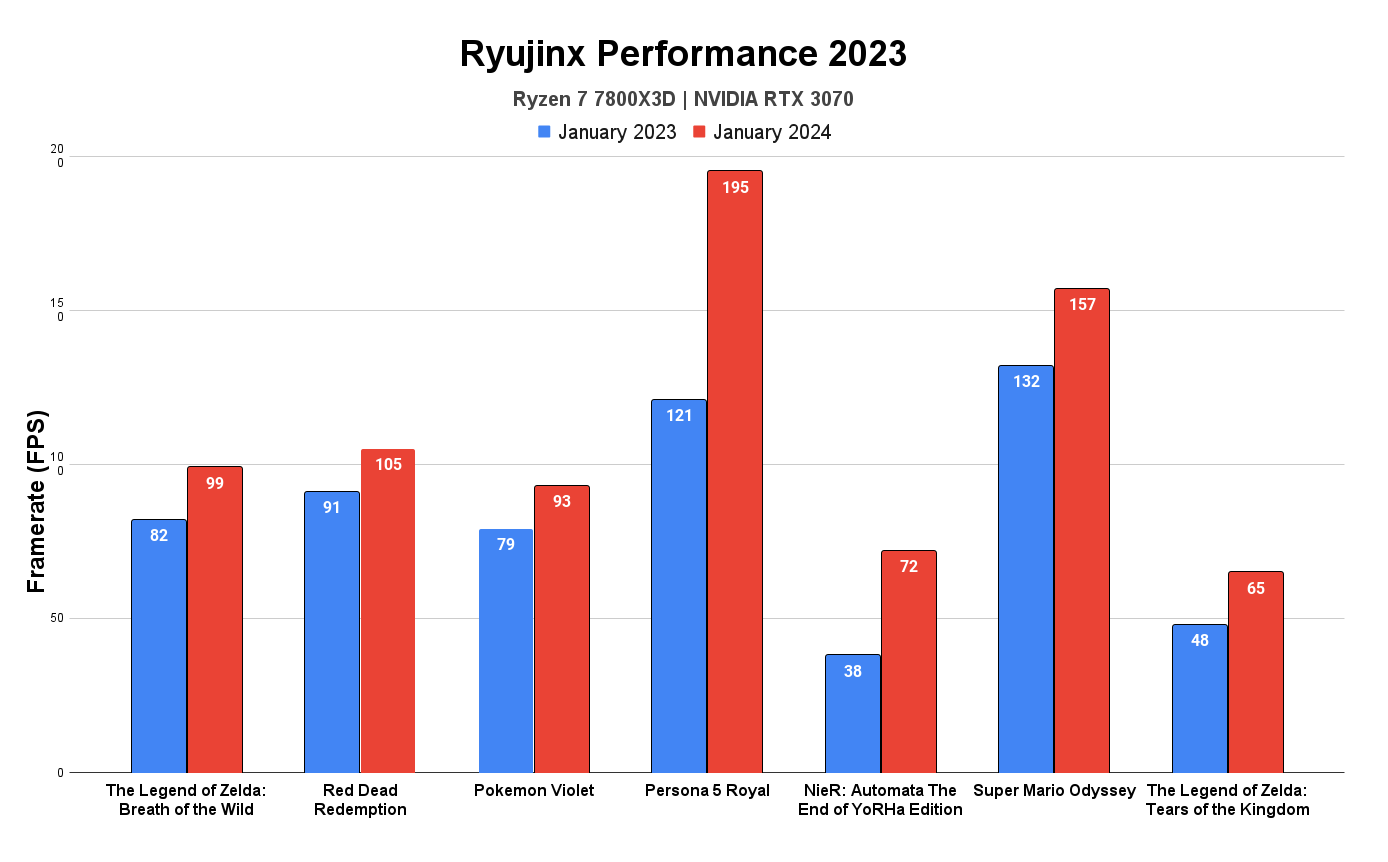

Hope you all aren’t bored with graphs yet.

We added over 700 games to our compatibility list during 2023 which brings the total for games tested (and reported) on Ryujinx to 4255. Over 83% of those are reported as having no graphical, technical or stability issues at all, with a further 12% of titles having at least one problem. This category is mostly filled with titles that have minor graphical glitches or stability issues. In other systems of marking, they may be considered playable also. The remaining 4.5% of titles only progress as far as the menus, if at all.

As far as performance goes, we had a year on year average improvement of 36% in our own usual suite of games. As usual this is highly game and hardware-dependent.

We’re not quite sure what happened to Persona 5 and NieR, but they have seen 61% and 89% improvements respectively. Our two Zelda games tested jumped by 20% and 35% (Tears of the Kingdom does indeed run on our January builds), and our classic benchmark of Super Mario Odyssey continues to keep climbing no matter what we do.

All in all, a great result and as usual, all of this just works. With no need to fiddle with settings for different games, the out-of-box experience is the best it’s ever been.

2023 sure had plenty of game releases, so let’s tot up just how many Ryujinx was right in the action for, running on day 1!

- The Legend of Zelda: Tears of the Kingdom ✓

- Super Mario Bros. Wonder ✓

- Super Mario RPG ✓

- Pikmin 4 ✓

- Metroid Prime Remastered ✓

- Sea of Stars ✓

- Octopath Traveler II ✓

- Fire Emblem Engage ✓

- Kirby’s Return to Dream Land Deluxe ✓

- Advance Wars 1+2: Re-boot Camp ✓

Seeing a ridiculous list of titles, first party and otherwise, be playable with no changes always fills us with pride. It would be wrong of us to omit that a couple of these games (Tears of the Kingdom among them) did need some additional love to reach our standards, but the majority of the year has gone without a hitch.

Closing words

That’s all from us this fine January. We hope you’ll stick around for another year of this madness because we’re sure that 2024 will not be dull!

Onto our scheduled sales pitch: if you would like to contribute to Ryujinx then there are many ways in which you can assist us. Knowledgeable in emulation or graphics development, or even just use C#/.NET in your day job and want something cool to stat-pad your resume? We’re always looking for more folks to check out our GitHub. Fix bugs, add features, or our personal favourite: stare blankly at Visual Studio while imposter syndrome slowly creeps over your shoulder.

If you couldn’t write “Hello World” in English, let alone a programming language, you can still help us fund our development time and other costs monetarily via our Patreon, or help us with testing games and helping fellow users figure out this whole emulation thing on our Discord.

Until next time!