Progress Report January 2024

February is here, which must mean that January has slid by once again.

While this time of year isn’t usually so hot for those Twitter-melting AAA games, we all need a less action-packed schedule sometimes. Ubisoft proved they still have some ability to make a compelling video game, and potential game of the year Turnip Boy Robs a Bank proves that just about any idea can make it!

Opinions on games aside, it’s time for the usual agenda.

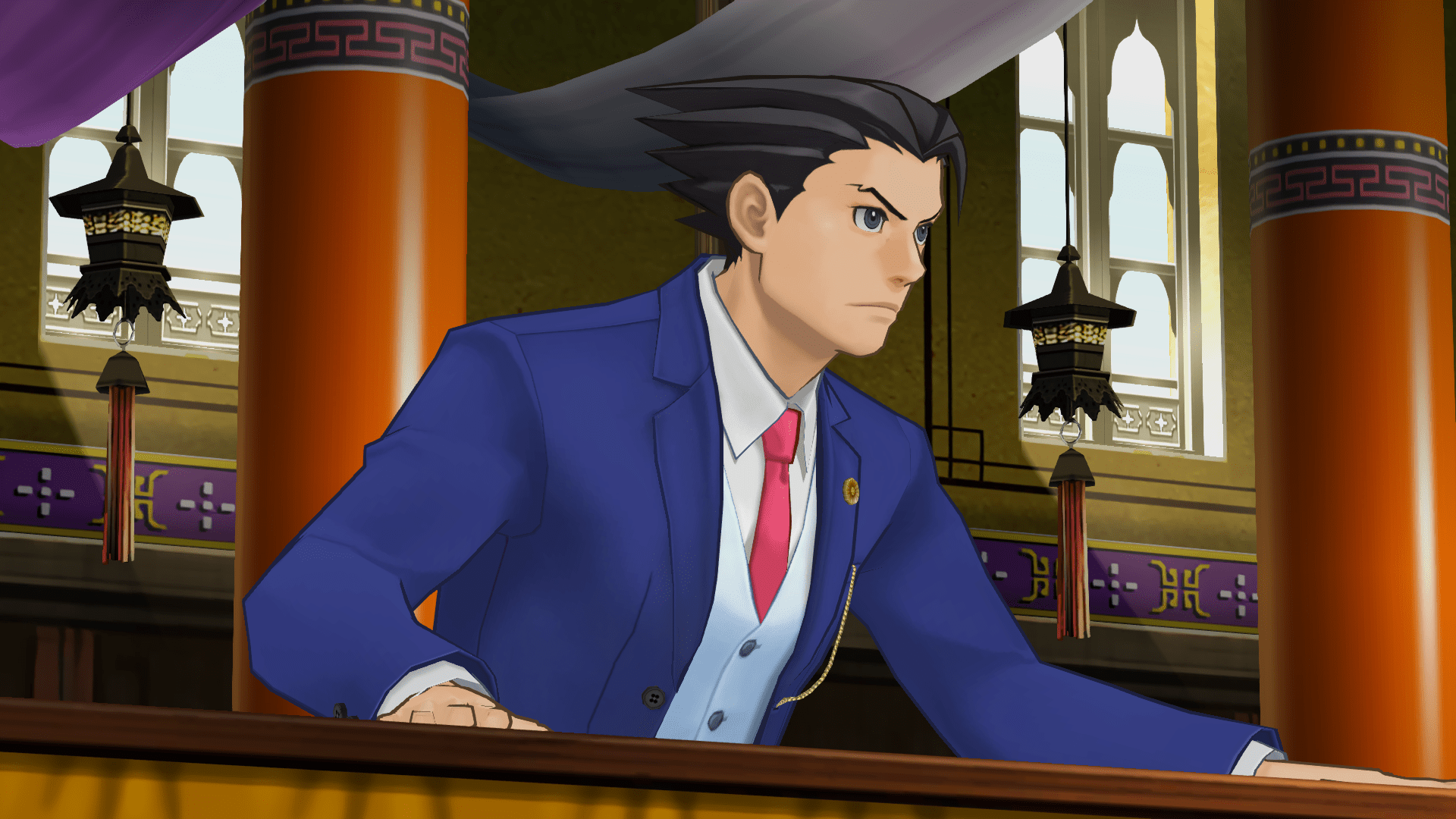

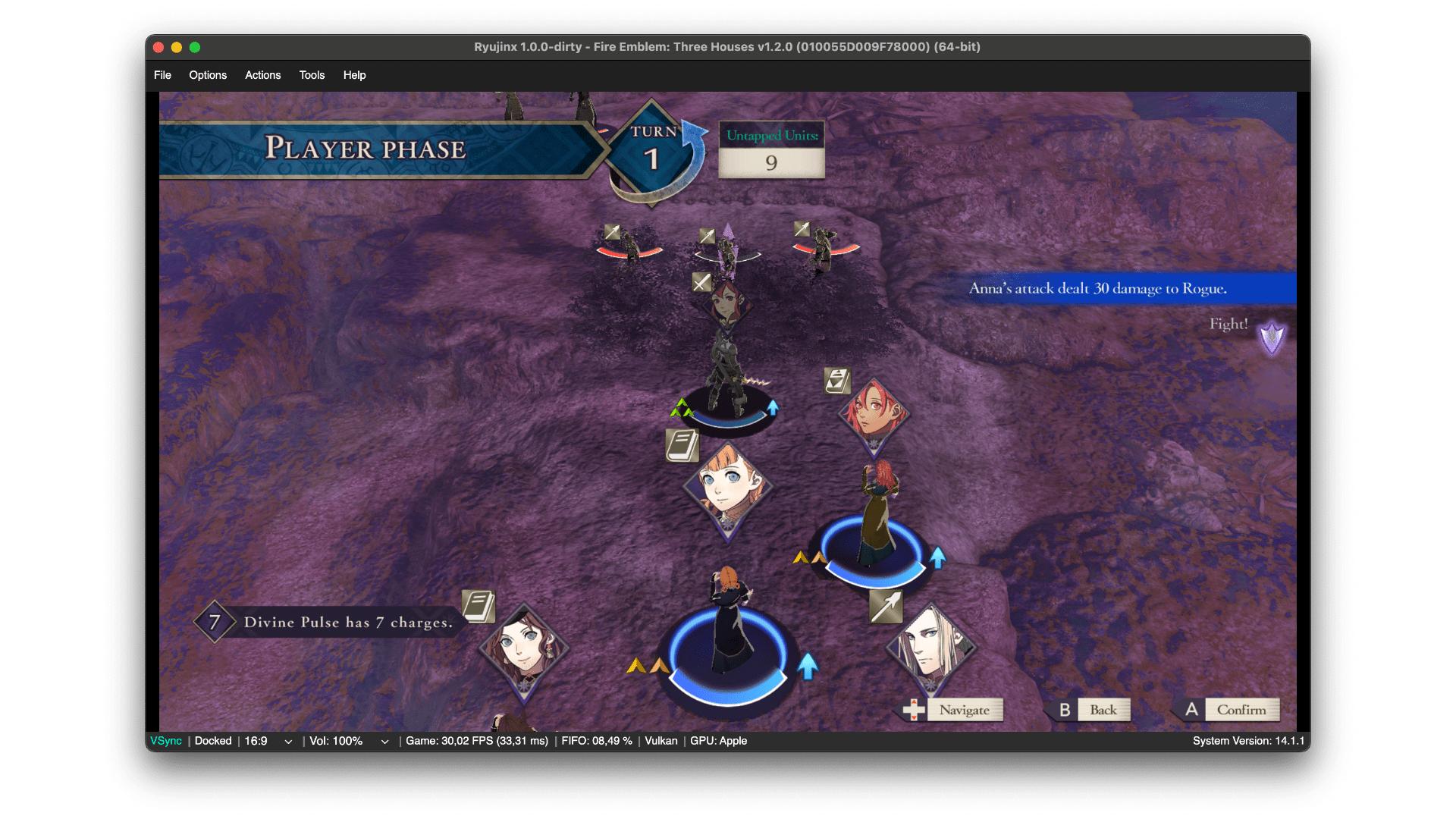

As we’ve perhaps highlighted before, macOS as a platform is a bit of a nightmare as far as 3D graphics go. Everything Ryujinx renders there has to go through MoltenVK which, while a lifesaver, is not immune to frustration. On all platforms, if a shader compilation fails (usually caused by invalid output from the game), we can attempt to skip the draw to avoid crashing the whole program. There is, however, a strange case on macOS where some pipeline variants are A-OK but others fail; it’s this final case that could still cause consistent crashes in games like Fire Emblem: Three Houses.

Adding early-return conditions for more of these failure cases does not completely fix the graphical rendering, but it does avoid the crash and actually allows users to provide us with logs for future pipeline issues!
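To make the “skip instead of crash” idea concrete, here is a minimal Python sketch assuming a cache of pipeline variants keyed by render state. `PipelineCache`, `draw` and the compile callback are hypothetical names for illustration, not Ryujinx’s actual (C#) code; the point is that failure is tracked per variant, so working variants keep drawing while broken ones are skipped.

```python
from typing import Callable, Dict, Hashable, List, Optional


class PipelineCache:
    """Hypothetical cache of compiled pipeline variants keyed by render state."""

    def __init__(self, compile_variant: Callable[[Hashable], object]):
        self._compile = compile_variant
        self._variants: Dict[Hashable, Optional[object]] = {}

    def get(self, state_key: Hashable) -> Optional[object]:
        # Compile each variant once; a failure is stored as None so it is not retried every frame.
        if state_key not in self._variants:
            try:
                self._variants[state_key] = self._compile(state_key)
            except RuntimeError as err:
                print(f"pipeline variant {state_key!r} failed: {err}")
                self._variants[state_key] = None
        return self._variants[state_key]


def draw(cache: PipelineCache, state_key: Hashable, submitted: List[tuple]) -> bool:
    pipeline = cache.get(state_key)
    if pipeline is None:
        # Early return: drop this one draw instead of taking down the whole program.
        return False
    submitted.append((pipeline, state_key))
    return True
```

Caching the failure as `None` is a choice made for this sketch: it avoids re-compiling and re-logging a variant that is known to fail on every frame.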

As shown above, GPU drivers are heavily overlooked when it comes to how much they actually impact nearly everything. Many people assume drivers are broadly similar outside of certain features, whereas in reality they are some of the largest and most complicated programs ever written. Each has its own paths for operations, which may or may not be designed around specific hardware or tailored to specific software. One of the reasons that we, and many 3D application developers in general, give Nvidia a lot of praise is that their driver is ridiculously resilient to whatever route the developer takes. Because of this, it is often easy to spot large discrepancies in rendering costs between two devices that are, on paper, equally matched.

Thus, there are a few in-flight changes that attempt to reduce the cost that other drivers place on certain operations, the first of which is the use of templates for descriptor updates.

The main beneficiary of template use is the RADV Mesa driver, which can see global framerate improvements of up to 5%, along with a drop of about the same size in Vulkan backend time %. For comparison, Nvidia saw no framerate improvement and only a 0.6% reduction in backend time cost, as their driver was already handling the suboptimal path extremely well.
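For context: in Vulkan, the classic path is `vkUpdateDescriptorSets`, where every call hands the driver an array of `VkWriteDescriptorSet` structs to walk. With `vkUpdateDescriptorSetWithTemplate`, the layout is described once up front through `VkDescriptorUpdateTemplateEntry` records (binding, array element, count, offset, stride), and each update just passes a pointer to packed data. The Python model below is only a conceptual sketch of what applying such a template involves; descriptors are assumed to be plain 64-bit handles, and `TemplateEntry`/`apply_template` are hypothetical names.

```python
import struct
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class TemplateEntry:
    # Mirrors the VkDescriptorUpdateTemplateEntry fields that matter for this model.
    dst_binding: int
    dst_array_element: int
    descriptor_count: int
    offset: int  # byte offset of the first descriptor in the caller's data
    stride: int  # byte distance between consecutive descriptors


# Assumption: every descriptor is modelled as a single little-endian 64-bit handle.
HANDLE = struct.Struct("<Q")


def apply_template(entries: List[TemplateEntry], blob: bytes) -> Dict[Tuple[int, int], int]:
    """Decode a packed blob using a layout that was fixed when the template was created."""
    descriptor_set: Dict[Tuple[int, int], int] = {}
    for e in entries:
        for i in range(e.descriptor_count):
            (handle,) = HANDLE.unpack_from(blob, e.offset + i * e.stride)
            descriptor_set[(e.dst_binding, e.dst_array_element + i)] = handle
    return descriptor_set
```

Because the entry list never changes after creation, a driver is free to pre-process it once, which is presumably where drivers like RADV recover the per-update overhead.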

Citizens Unite!: Earth x Space was problematic to play prior to January unless you were wearing an eyepatch, or something similar that would block a chunk of your vision. Some draw commands can pass their parameters “inline”, which does not update any of the 3D engine state. If a draw that relies on state variables is requested afterwards, it ends up constructing the image from incorrect data. Forcing a vertex buffer update whenever the game switches between these two kinds of draw resolved the issue.
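The fix boils down to a dirty flag that is also raised on a change of draw type. Here is a minimal Python sketch of that idea; `DrawKind` and `VertexBufferTracker` are hypothetical, and the assumption that both directions of the switch invalidate the bound buffers follows the description above rather than Ryujinx’s actual code.

```python
from enum import Enum, auto
from typing import Optional


class DrawKind(Enum):
    INLINE = auto()  # parameters passed inline; 3D engine state is not updated
    STATE = auto()   # draw that reads its parameters from engine state


class VertexBufferTracker:
    """Hypothetical tracker deciding when vertex buffers must be updated before a draw."""

    def __init__(self) -> None:
        self.dirty = True
        self.last_kind: Optional[DrawKind] = None
        self.updates = 0  # stand-in for actually re-uploading/binding vertex buffers

    def before_draw(self, kind: DrawKind) -> None:
        # What is bound for one kind of draw is assumed invalid for the other,
        # so switching kinds forces an update even if nothing else changed.
        if self.last_kind is not None and kind is not self.last_kind:
            self.dirty = True
        if self.dirty:
            self.updates += 1
            self.dirty = False
        self.last_kind = kind
```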

Unusually, this abruptly concludes our section on GPU updates.

However, the resources saved here allowed a pretty major piece of work to be completed by project lead gdkchan.

ARM devices are having their own renaissance at the moment after Apple made a statement with their M1 line of devices. The architecture no longer looks destined to be purely the domain of mobile and small form factor products: Microsoft finally seems to be stepping up its Windows on ARM efforts, Linux is already in a great spot, Apple has been all-in since 2020, and we’re starting to see chip designers like Qualcomm enter the PC marketplace.

Ryujinx already works on any ARM device running a supported OS via our JIT compiler, but this is often extremely wasteful considering that the Switch itself is also ARM-based. On macOS devices, we currently use Apple’s Hypervisor framework to (almost) natively execute Switch code with near-zero overhead, but this is not a particularly universal solution. We cannot make use of the Apple hypervisor anywhere but macOS, and while we could implement similar solutions targeting Windows and Linux, the code bloat would be massive.

We decided on a hybrid approach of still JITing the code, but with new ‘lightning’ paths.

All Switch code still passes through our JIT compiler but, when running on an ARM CPU, the compiler can now look at a code block and check whether anything actually needs recompiling. In the best case it can act as a zero-cost passthrough similar to a hypervisor, and in the worst case it still does only a fraction of the work an x86 system would need.
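As an illustration of “check whether anything needs recompiling”, here is a minimal Python sketch that scans a block of AArch64 instruction words, copies host-compatible ones through untouched, and flags supervisor calls (`SVC`), which can never run natively because they target the Switch kernel. Only `SVC` is detected here; a real translator presumably has to handle more instruction classes (PC-relative addressing, system registers and so on), and `translate_block` plus the `CALL_EMULATOR_SVC` placeholder are hypothetical.

```python
from typing import List, Tuple, Union

# AArch64 "SVC #imm16": bits [31:21] = 0b11010100000, bits [4:0] = 0b00001.
SVC_MASK = 0xFFE0001F
SVC_VALUE = 0xD4000001


def needs_rewrite(word: int) -> bool:
    """Only supervisor calls are flagged in this sketch."""
    return (word & SVC_MASK) == SVC_VALUE


def translate_block(words: List[int]) -> Tuple[List[Union[int, tuple]], int]:
    """Copy native instructions verbatim and mark the rest for recompilation."""
    out: List[Union[int, tuple]] = []
    rewritten = 0
    for word in words:
        if needs_rewrite(word):
            imm16 = (word >> 5) & 0xFFFF
            out.append(("CALL_EMULATOR_SVC", imm16))  # placeholder for emitted host code
            rewritten += 1
        else:
            out.append(word)  # passthrough: this instruction runs as-is on an ARM64 host
    return out, rewritten
```

For a block like `ADD X0, X0, #1` / `SVC #0x26` / `RET` (`0x91000400`, `0xD40004C1`, `0xD65F03C0`), two of the three words pass straight through and only the `SVC` needs new code.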

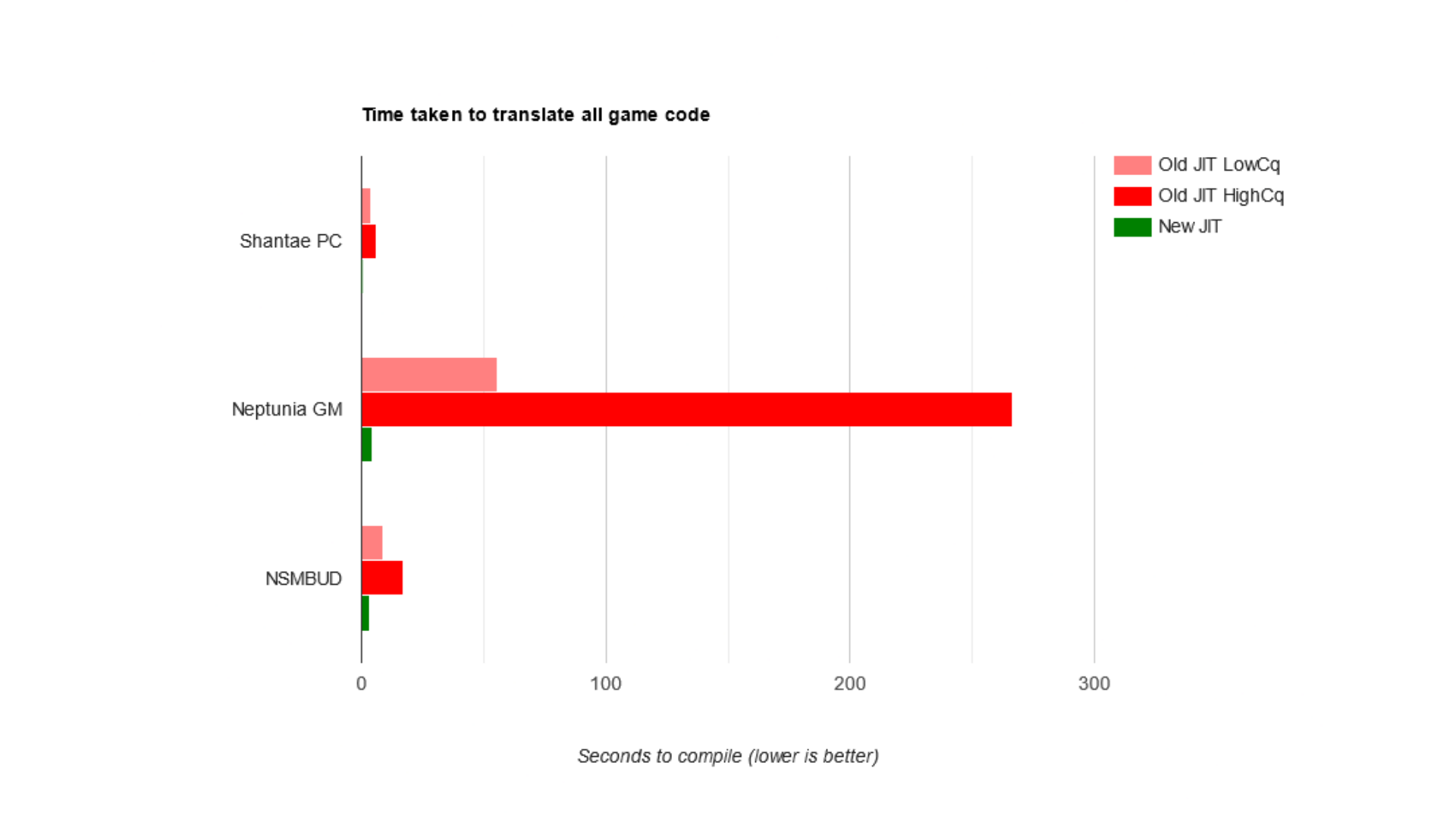

The graph above plots the time it would take for an Apple M1 chip to recompile all of the code in a game binary under certain conditions.

Pink = Full recompilation with focus on speed over code quality.

Red = Full recompilation with focus on code quality over speed.

Green = Lightning recompilation where needed.

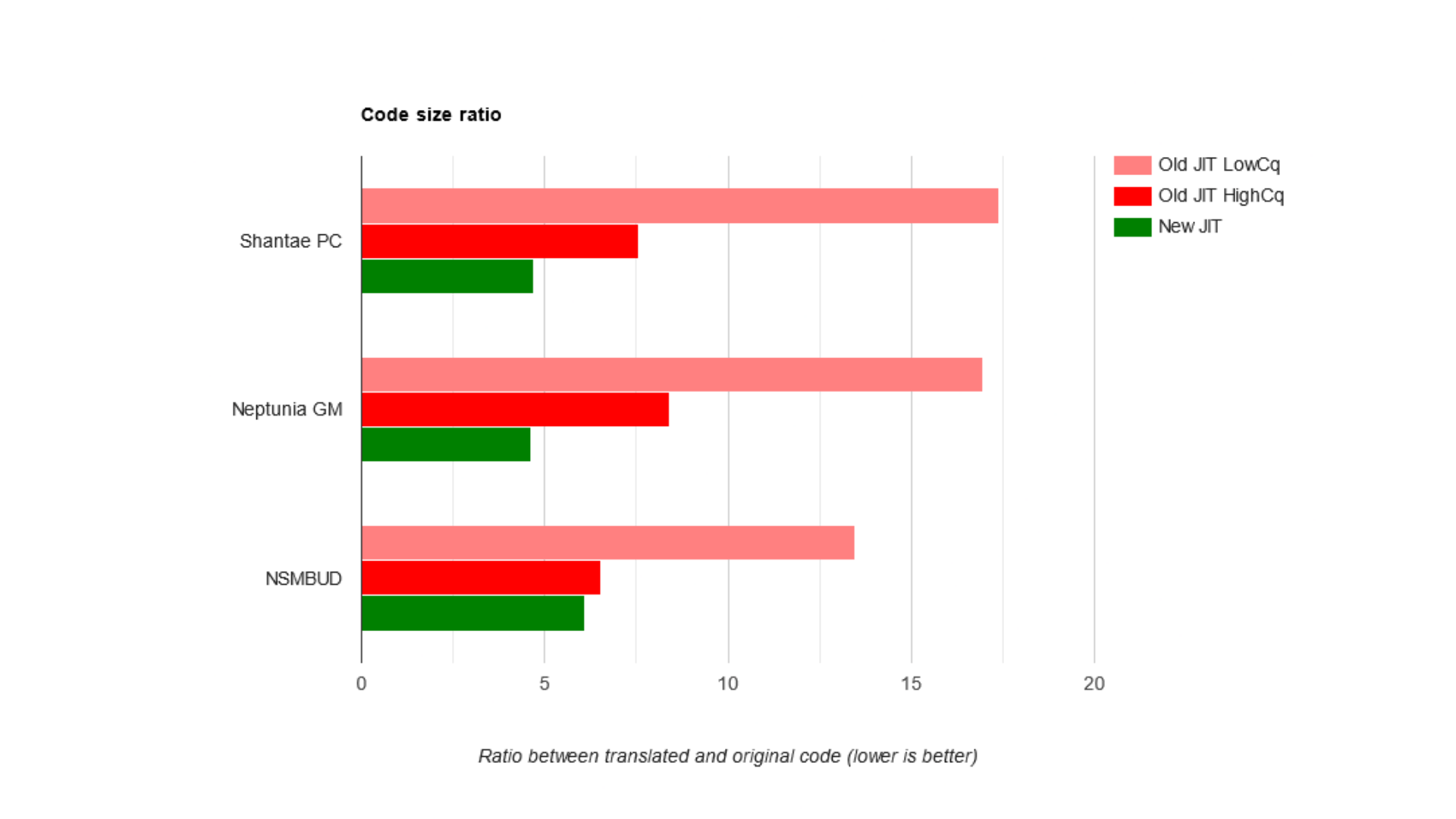

Okay, so speed is absolutely not a problem. But since the old JIT had high and low code quality modes, how does code quality itself stack up (think of this as binary size, or “number of instructions”)?

The takeaway is that the new JIT can produce better code, and do so much faster than the old JIT. The most dramatic difference is seen on Neptunia, which has the largest code size: it took 4.5 minutes to compile with the old JIT in HighCq mode, while the new JIT took only 4.64 seconds. The old JIT produced 633MB of code in HighCq mode, while the new one produced 348MB, almost half the size in a fraction of the time.
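Putting the Neptunia figures quoted above into ratios (nothing here beyond simple arithmetic on those numbers):

```python
old_jit_seconds = 4.5 * 60  # 4.5 minutes, old JIT in HighCq mode
new_jit_seconds = 4.64
old_jit_mb, new_jit_mb = 633, 348

speedup = old_jit_seconds / new_jit_seconds
size_ratio = new_jit_mb / old_jit_mb
print(f"{speedup:.1f}x faster, {size_ratio:.0%} of the old code size")
# prints: 58.2x faster, 55% of the old code size
```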

Many may recognise that New Super Mario Bros. U Deluxe (NSMBUD in the above graphs) is actually a 32-bit game and cannot be executed natively via a hypervisor or any other method without a JIT. By choosing an approach that doesn’t only consider 64-bit titles, we can significantly reduce the overhead on games like NSMBUD or Mario Kart 8 Deluxe, which have been a major pain point for ARM64 devices; the easiest showcase of this is boot times.

With this, we should have an excellent foundation for future ARM devices on our supported platforms that is low maintenance and not dependent on any specific frameworks (Apple users just got lucky this time!). New ARM64 Linux builds are already available and being used for some cool stuff. The folks over at the Asahi Linux project (Linux for M-series Macs) have already been using us to stress test their OpenGL 4.6 driver!

We’re sure that some of you are asking why we aren’t pursuing an alternative approach dubbed “Native Code Execution” or “NCE”, which has been massively popularized by Switch emulators on Android devices. There are a few reasons why NCE is not our preferred response to tapping the potential of ARM devices:

- It is impossible to run Switch system instructions like service calls directly, even on an ARM device, as they are specific to the Switch OS and kernel. As such, an NCE approach requires patching the game ROM to redirect these instructions into host emulator calls. These modifications give the game access to the host and are fully visible to the guest program.

- Interrupting the guest threads becomes somewhat complicated, since we can't insert "interruption points" in the code. We can use `pthread_kill` on Unix-like OSs, but Windows has no such thing.

- The code will access the emulator address space directly, so we need to set it up in a way that keeps the game happy, with the allocated guest region contained entirely inside the guest address space.
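The first point is easiest to see in code. Below is a minimal Python sketch, assuming one hypothetical trampoline slot per call site, of how an NCE-style loader might overwrite each `SVC` in a text segment with an AArch64 `B` (unconditional branch) to a host trampoline. The trampolines themselves are omitted; the takeaway is that the game’s own code is rewritten, so the game could read its text segment and see the branches.

```python
from typing import Dict, List

SVC_MASK, SVC_VALUE = 0xFFE0001F, 0xD4000001


def encode_b(pc: int, target: int) -> int:
    """Encode AArch64 'B <target>' for an instruction located at address pc."""
    offset = target - pc
    if offset % 4 or not -(1 << 27) <= offset < (1 << 27):
        raise ValueError("target not reachable with a single B instruction (+/-128MB)")
    return 0x14000000 | ((offset >> 2) & 0x03FFFFFF)


def patch_svcs(text: List[int], base: int, trampoline_base: int, trampoline_size: int) -> Dict[int, int]:
    """Replace each SVC in place with a branch to its own (hypothetical) trampoline slot.

    Returns {svc_address: svc_number} so each trampoline knows which call to emulate.
    """
    sites: Dict[int, int] = {}
    for i, word in enumerate(text):
        if (word & SVC_MASK) == SVC_VALUE:
            pc = base + 4 * i
            slot = trampoline_base + trampoline_size * len(sites)
            text[i] = encode_b(pc, slot)  # the guest's own code is modified here
            sites[pc] = (word >> 5) & 0xFFFF
    return sites
```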

For platforms with a 16KB page size, there are additional challenges: we can't map the text and data segments as RX and RW respectively, since they are only 4KB aligned, not 16KB aligned. We also can't just map them all as RWX if the platform enforces W^X. Making this work would require even further patching of the executable.
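A small worked example of the alignment problem, as a Python sketch with made-up segment addresses: a text segment ending at `0x5000` and a data segment starting there never share a 4KB page, but with 16KB pages both land in page 1, which would have to be RX and RW at the same time.

```python
from typing import List, Set, Tuple


def pages_touched(start: int, size: int, page_size: int) -> Set[int]:
    """Indices of every page overlapped by [start, start + size)."""
    first = start // page_size
    last = (start + size - 1) // page_size
    return set(range(first, last + 1))


def conflicting_pages(segments: List[Tuple[str, int, int, str]], page_size: int) -> Set[int]:
    """Pages that would need two different protections (e.g. RX and RW) at once."""
    owner = {}
    conflicts = set()
    for _name, start, size, prot in segments:
        for page in pages_touched(start, size, page_size):
            if page in owner and owner[page] != prot:
                conflicts.add(page)
            owner.setdefault(page, prot)
    return conflicts


# Hypothetical layout: 20KB of code followed directly by 16KB of data.
segments = [("text", 0x0000, 0x5000, "RX"), ("data", 0x5000, 0x4000, "RW")]
```

Calling `conflicting_pages(segments, 0x1000)` yields no conflicts, while `conflicting_pages(segments, 0x4000)` reports page 1.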

Still, given that NCE certainly does have its advantages, it may be worth looking into again in the future. For now, though, we valued a consistent approach that integrates cleanly into the JIT project, offers near-native execution speeds and, very importantly, improves 32-bit game execution immensely as well.

After that heavy section, we’ll blitz through a few service changes that were finalized in January:

- Implemented the arp:r & arp:w services on the new IPC. These are known as “glue” services as they manage things like launching applications and application metadata. Migrating these to our newer service system is a requirement for other services to follow.

- An integer overflow when downsampling surround to stereo has been fixed in the audio services. This was causing some crackling and distortion when using the SDL2 audio backend.

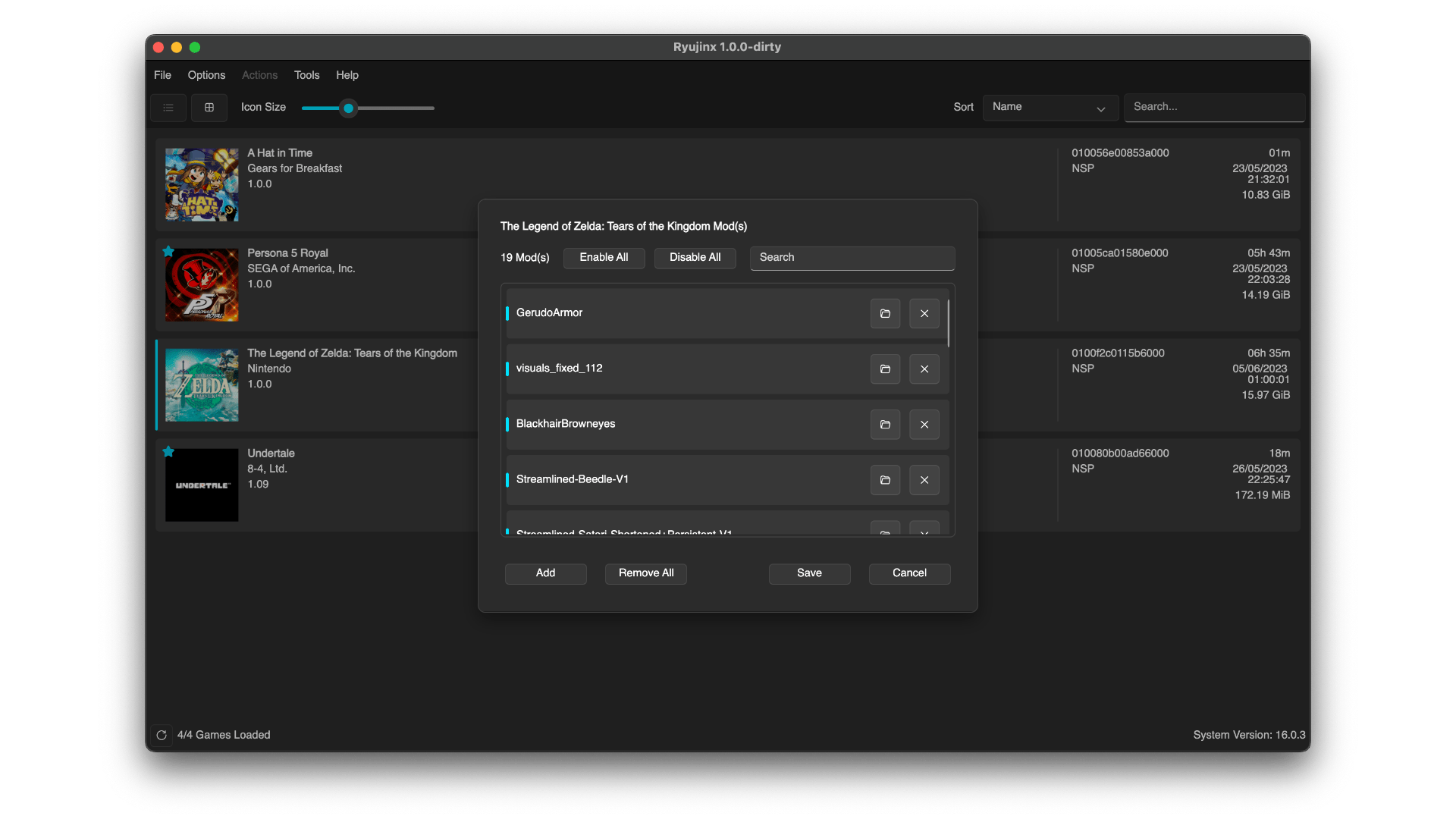

- LayeredFS mod loading is now performed in parallel to improve game boot speed when many mods are being applied, and modifications should now be lazy-loaded only when the changes are required.
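To show why the surround-to-stereo overflow mentioned above sounds like crackling, here is a minimal Python sketch of a 5.1 to stereo downmix on 16-bit samples. The -3 dB (Q15 `23170`) centre/surround weight and dropping LFE are assumptions for illustration, not necessarily what the Ryujinx mixer does. When the summed sample exceeds the int16 range, wrapping turns a loud positive peak into a large negative one (an audible click), while saturating just clips it.

```python
INT16_MIN, INT16_MAX = -32768, 32767
# Assumed ~0.7071 (-3 dB) weight for centre and surround channels, in Q15 fixed point.
K_Q15 = 23170


def wrap_int16(x: int) -> int:
    """What a plain 16-bit store does on overflow: wrap around (the bug)."""
    return ((x + 32768) & 0xFFFF) - 32768


def clamp_int16(x: int) -> int:
    """Saturate to the int16 range instead of wrapping (the fix)."""
    return max(INT16_MIN, min(INT16_MAX, x))


def downmix_51_to_stereo(fl, fr, c, lfe, sl, sr, saturate=True):
    # Accumulate in Python's unbounded int, then narrow to 16 bits at the end.
    # lfe is accepted but dropped in this simplified sketch.
    left = fl + ((c * K_Q15) >> 15) + ((sl * K_Q15) >> 15)
    right = fr + ((c * K_Q15) >> 15) + ((sr * K_Q15) >> 15)
    narrow = clamp_int16 if saturate else wrap_int16
    return narrow(left), narrow(right)
```

With a loud front-left sample of 30000 plus centre and left-surround samples of 10000, the left sum is 44140: saturating gives 32767, but wrapping gives -21396.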

On the GUI side, we have seen a few major additions to our Avalonia frontend.

Right-to-left language support was added, and after many years and countless requests, a mod manager is now implemented which will allow users to add any number of game mods, and actually be able to turn them off!

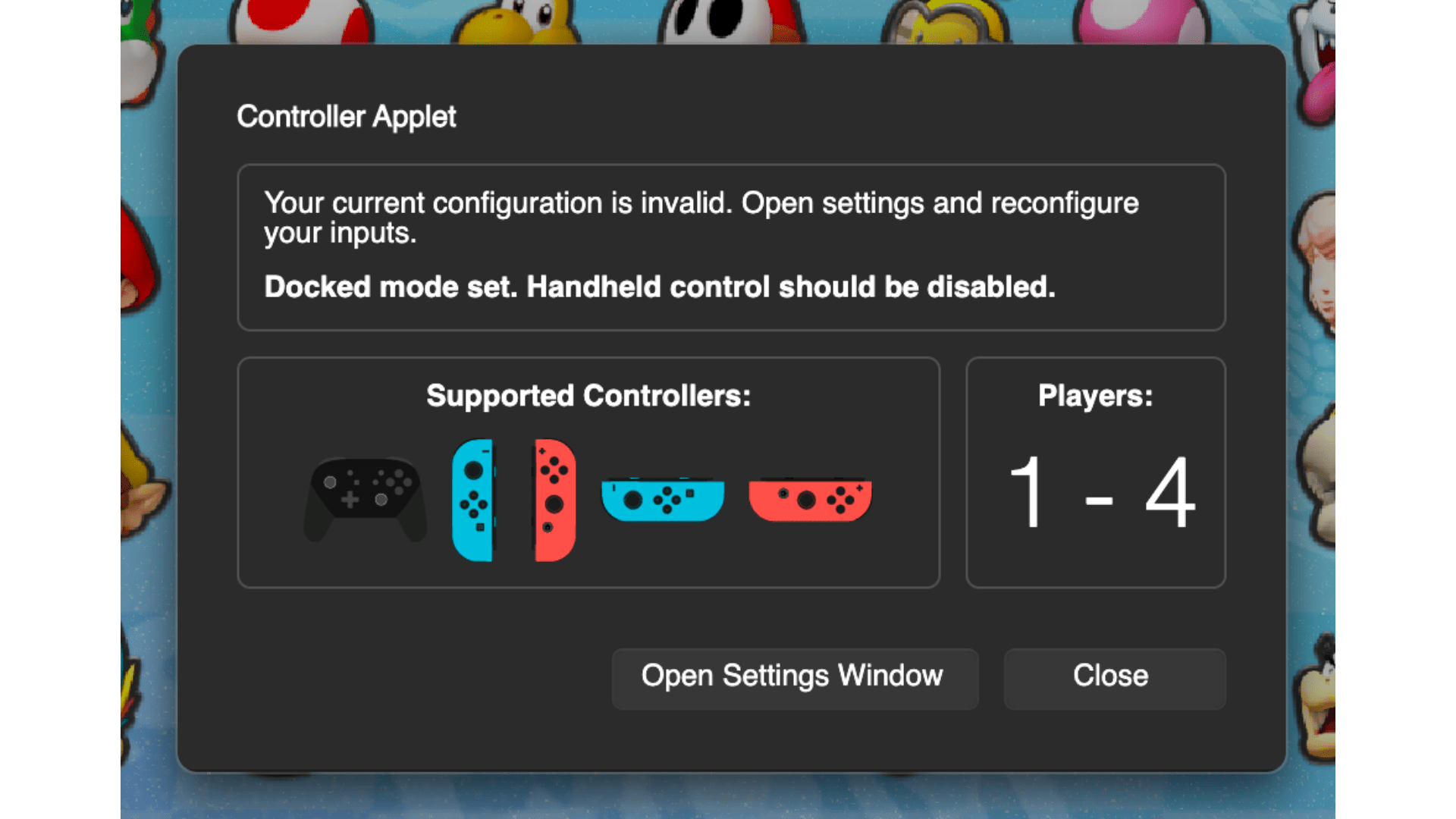

Additionally, the HLE controller applet was reworked a little to offer a more visual guide to supported players and controllers.

The applet will now show icons of which controller types the game is requesting and how many players it will accept. In general, this was one of the more confusing applets due to the “wall of text” effect, and the fact that older consoles simply didn’t care as much as the Switch does about player/controller combos.

On the topic of player/controller combos, a better system for identifying controllers on disconnect/reconnect was implemented. Previously, the system relied on the controller ID (standardized for a specific controller) as well as a global index assigned at connect time. Unfortunately, this global index would change depending on when and how the device was connected, resulting in devices being assigned the wrong profile, or no profile at all, on connection. The system now uses a separate index in addition to the GUID to track devices, so added or reconnected controllers are much more consistently given their correct profiles.
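A minimal Python sketch of the difference, assuming the “separate index” is a per-GUID slot (the lowest slot not currently taken by another controller with the same GUID). `ControllerRegistry` and the profile table are hypothetical; the idea is that a controller’s key no longer depends on how many unrelated devices were connected before it.

```python
from typing import Dict, Optional, Tuple

DeviceKey = Tuple[str, int]  # (GUID, per-GUID slot)


class ControllerRegistry:
    """Hypothetical tracker keyed by (GUID, slot) rather than a global connect-order index."""

    def __init__(self, profiles: Dict[DeviceKey, str]):
        self.profiles = profiles
        self.connected: Dict[int, DeviceKey] = {}  # OS handle -> key

    def connect(self, handle: int, guid: str) -> Optional[str]:
        # Reuse the lowest slot not held by another connected controller with this GUID.
        used = {slot for g, slot in self.connected.values() if g == guid}
        slot = next(i for i in range(len(used) + 1) if i not in used)
        key = (guid, slot)
        self.connected[handle] = key
        return self.profiles.get(key)

    def disconnect(self, handle: int) -> None:
        self.connected.pop(handle, None)
```

One limitation of this simple scheme: two identical controllers that both disconnect can swap slots when they come back, since nothing distinguishes them beyond the GUID.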

For those who are, very patiently, waiting on further updates from us on the UI development side: we last mentioned that one of the major blockers for our switch to Avalonia was on the Linux side, affecting Steam Deck users. We’re finally happy to report that the root cause turned out to have an annoyingly simple one-line fix. The end of the tunnel is nigh!

Closing Words:

As we enter 2024 en masse, we are all incredibly thankful for everyone’s support towards this project. It’s been 6 years so far, and whether it was through Patreon, reporting bugs, making code contributions on GitHub, or assisting other users in our Discord, because of all of you our motivation and goal list are still just as high as they were in 2018. We are truly in awe of how far this project has come, so once again, thank you!